
Aand again we're on AI, 'cause I'm not reading enough biochemistry. Next up after this one: mental models pt. 2 ❣️

Recently we discussed that neural networks usually do understand shiet ⇢ that got me interested in how they can be made to understand causal relationships.

CausalKG

One approach is definitely causal reasoning. I stumbled upon a paper outlining a possible structure of a causal knowledge graph. Okay, too close to dependent origination from Buddhism, but we'll manage.

Or not

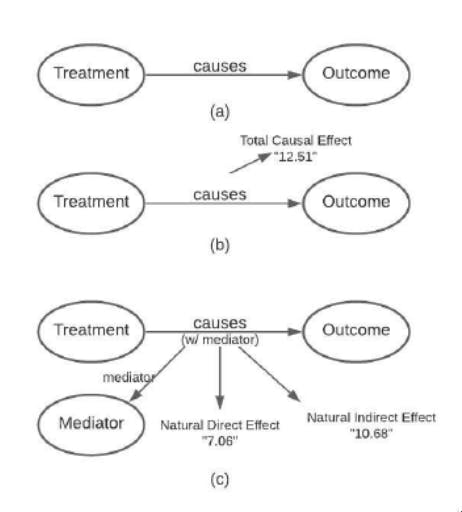

The authors propose the following structure:

[subject ⇒ causes {mediator: , direct:, indirect:} ⇒ object]

This combines a Bayesian causal network (what causes what) with an ontology network (what X is in a given domain, plus the relation types).

Or, as BinGPT eloquently puts it, "Ontology network for causal learning is a way of using existing knowledge to find out what causes what in a domain."
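To make that triple a bit more concrete, here is a minimal Python sketch of one such edge as a record. The class and field names are my own (hypothetical), not the paper's, and the `total_effect` helper assumes the effects simply add up, which holds for linear models without interactions but not in general.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CausalEdge:
    """[subject ⇒ causes {mediator, direct, indirect} ⇒ object] as a record.

    Field names are illustrative (hypothetical), not taken from the paper.
    """
    subject: str
    obj: str                          # `object` would shadow the builtin
    mediator: Optional[str] = None    # variable the effect flows through
    direct: Optional[float] = None    # effect of subject on obj NOT via the mediator
    indirect: Optional[float] = None  # effect of subject on obj via the mediator

    def total_effect(self) -> Optional[float]:
        # Assumes additive effects (e.g. a linear model with no interactions),
        # where total = direct + indirect. Returns None if either is unknown.
        if self.direct is None or self.indirect is None:
            return None
        return self.direct + self.indirect


# Toy numbers, purely illustrative.
edge = CausalEdge("smoking", "cancer", mediator="tar", direct=0.25, indirect=0.5)
print(edge.total_effect())  # 0.75
```

Keeping the mediator and both effects on the edge itself means a query like "how much of this effect goes through X?" never has to leave the triple.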

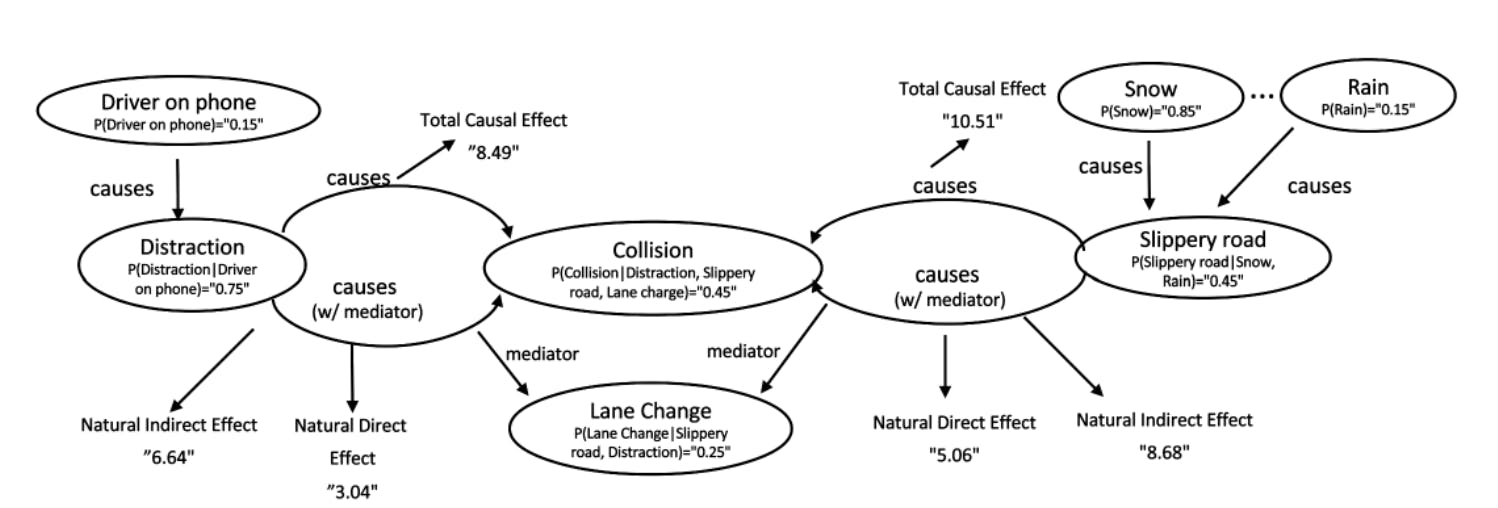

In the end, it all comes down to a graph like this:

Another visualization can be found here.

CausalUZbekistan

As I'm currently engaged in a startup building an associative database based on S. Williams's Associative Model of Data (hereafter AMD), one of the thoughts crossing my mind is "how the f*ck do we combine associative data and causal reasoning?"

AMD deals with two atomic data types, items and links, whereas the startup and its underlying ideas use a modified, unified data structure: the link, [id, type_id, from, to]. For a node, we simply omit the from and to fields. I gave a not-so-brief introduction here, on slides 14-24.
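A minimal Python sketch of that link structure, assuming `None` marks an omitted field (the real system may use something else, e.g. 0). The toy ids and names are hypothetical; the point is that a predicate is just another node, and a triple is a link whose `type_id` points at it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Link:
    """[id, type_id, from, to]; `from` is a Python keyword, hence `from_id`."""
    id: int
    type_id: int
    from_id: Optional[int] = None
    to_id: Optional[int] = None

    @property
    def is_node(self) -> bool:
        # A node is simply a link with `from` and `to` omitted.
        return self.from_id is None and self.to_id is None


# Hypothetical toy data: id 1 is a self-typed root type, "Thing".
names = {1: "Thing", 2: "mouse", 3: "mousetrap", 4: "evades"}
links = {i: Link(i, type_id=1) for i in names}  # all four are nodes
# The predicate "evades" (id 4) is the edge's type: mouse ⇒ evades ⇒ mousetrap
links[5] = Link(5, type_id=4, from_id=2, to_id=3)


def as_triple(link: Link) -> tuple[str, str, str]:
    """Read an edge back as (subject, predicate, object)."""
    if link.is_node:
        raise ValueError(f"link {link.id} is a node, not an edge")
    return names[link.from_id], names[link.type_id], names[link.to_id]


print(as_triple(links[5]))  # ('mouse', 'evades', 'mousetrap')
```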

So we can basically express a subject ⇒ predicate ⇒ object relationship. Can we do better and express a causal relationship?

My idea was to combine the strengths of the modified AMD Ryzen with knowledge network construction, extending it with a semi-fixed set of predicates. "Causes" is great, but LLMs can work out what more complex predicates mean and when they apply.

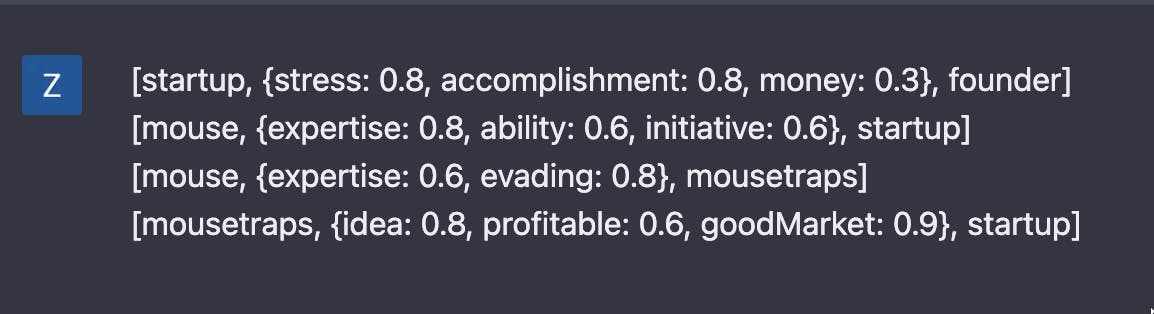

I've thought of the following structure:

So we could use an agreed-upon or expanding set of predicates/causal relationships. Since a link is essentially a pointer, the link structure also lets the mediator or other modifiers (say, a bias) point to another edge:
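Here is a minimal Python sketch of that pointer trick, reusing the same [id, type_id, from, to] shape. Assumptions: the modifier link's `from` is the causal edge and its `to` is the mediator node (the direction could just as well be reversed), and all ids, names and the `modifiers_of` helper are hypothetical. Because the causal edge is itself a link with an id, nothing special is needed to point at it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Link:
    id: int
    type_id: int
    from_id: Optional[int] = None
    to_id: Optional[int] = None


# Hypothetical vocabulary; ids and names are illustrative only.
names = {
    1: "Thing",
    2: "mousetraps", 3: "startup", 4: "good market",
    10: "causes",    # a predicate from the agreed (but extensible) set
    11: "mediator",  # a modifier type
}
links = {i: Link(i, type_id=1) for i in names}  # every named thing is a node

# The causal edge: mousetraps ⇒ causes ⇒ startup
links[20] = Link(20, type_id=10, from_id=2, to_id=3)
# The modifier is itself a link whose `from` is the edge above, not a node:
# "edge 20 is mediated by 'good market'".
links[21] = Link(21, type_id=11, from_id=20, to_id=4)


def modifiers_of(edge_id: int) -> dict[str, list[str]]:
    """Collect modifier links attached to an edge, grouped by modifier type."""
    found: dict[str, list[str]] = {}
    for link in links.values():
        if link.from_id == edge_id:
            found.setdefault(names[link.type_id], []).append(names[link.to_id])
    return found


print(modifiers_of(20))  # {'mediator': ['good market']}
```

A bias or any other modifier would just be another link type pointing at edge 20 in the same way.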

Field tests (no)

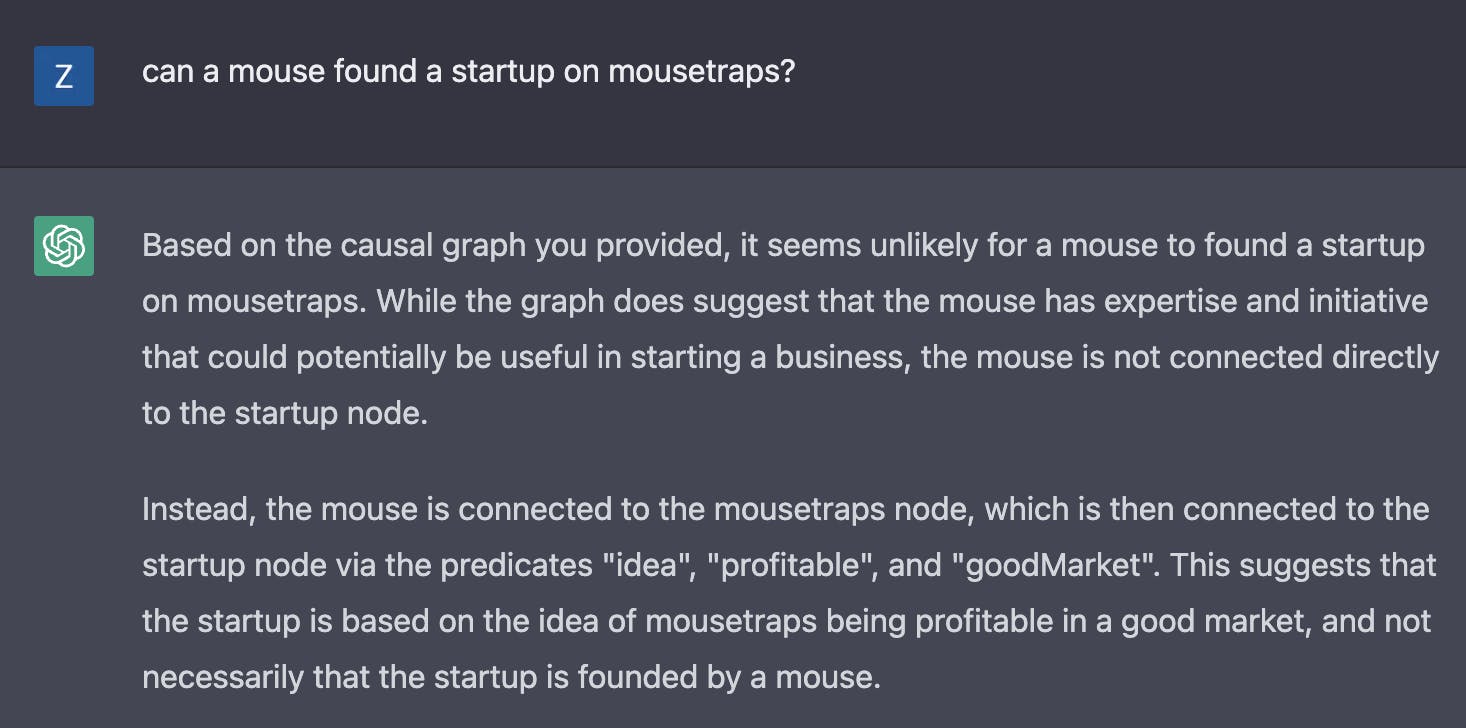

I tried this with the obvious candidate, ChatGPT, with mixed success:

Anthropic's Claude seems to fare a bit better:

Based on the causal graphs provided, it is unlikely that a mouse can found a startup on mousetraps. While the mouse has expertise, ability and initiative which could be useful for a startup founder, the mouse is more likely to use those capabilities to evade mousetraps than to found a startup focused on mousetraps. The mousetraps -> startup edge suggests that the idea, profitability and good market for mousetraps could lead to a startup, but the mouse -> mousetraps edge suggests the mouse is more likely to avoid mousetraps than build a business around them. So, while possible, it is unlikely based on the provided causal knowledge.
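The post doesn't show how the graphs were handed to the models, so the following is only a hypothetical sketch: a Python helper that serializes causal edges one per line into a plain-text prompt. The two edges are reconstructed from Claude's answer above, not from the original images, the predicate "evades" is my guess, and no particular LLM API is assumed.

```python
from dataclasses import dataclass, field


@dataclass
class CausalEdge:
    subject: str
    predicate: str
    obj: str
    mediators: list[str] = field(default_factory=list)

    def to_text(self) -> str:
        # Render in the post's [subject ⇒ predicate {mediator: ...} ⇒ object] spirit.
        line = f"{self.subject} -> {self.predicate} -> {self.obj}"
        if self.mediators:
            line += f" {{mediator: {', '.join(self.mediators)}}}"
        return line


def build_prompt(edges: list[CausalEdge], question: str) -> str:
    """Serialize the causal graph as one edge per line, then append the question."""
    graph = "\n".join(edge.to_text() for edge in edges)
    return f"Causal graph:\n{graph}\n\nUsing only the graph above, answer: {question}"


# Hypothetical reconstruction from Claude's answer; the original graphs were images.
edges = [
    CausalEdge("mouse", "evades", "mousetraps"),
    CausalEdge("mousetraps", "causes", "startup",
               mediators=["idea", "profitability", "good market"]),
]
print(build_prompt(edges, "Can a mouse found a startup on mousetraps?"))
```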

Conclusion

All in all, I believe that combining LLMs with the updated causal structure above can help make the world better (well, at least help the networks understand it a wee bit better), leading to less BS and more usefulness 🤔 Would love feedback on this. Also, plz teach me how to upload to arXiv.